A Learning Algorithm Is A Consistent Learner Provided

The FIND-S algorithm's final hypothesis will also be consistent with the negative examples, provided the correct target concept is contained in H and the training examples are correct. Machine learning (ML) is the study of computer algorithms that improve automatically through experience and by the use of data.
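The FIND-S procedure can be sketched in Python. This is a minimal sketch that assumes Mitchell-style conjunctive hypotheses. Each attribute constraint is a specific value, `"?"` (any value), or `None` (no value allowed). The function names `find_s` and `matches` and the small weather-style dataset are illustrative and do not come from the original.

```python
from typing import List, Optional, Sequence, Tuple

# A hypothesis is a list of constraints: a value, "?" (anything), or None (nothing).
Hypothesis = List[Optional[str]]
Example = Tuple[Tuple[str, ...], bool]


def find_s(examples: Sequence[Example]) -> Hypothesis:
    """Return the maximally specific conjunctive hypothesis covering all positives."""
    n_attributes = len(examples[0][0])
    h: Hypothesis = [None] * n_attributes  # start from the most specific hypothesis
    for x, is_positive in examples:
        if not is_positive:
            continue  # FIND-S ignores negative examples
        for i, value in enumerate(x):
            if h[i] is None:
                h[i] = value  # first positive example: adopt its value
            elif h[i] != value:
                h[i] = "?"  # conflicting values: generalize this attribute
    return h


def matches(h: Hypothesis, x: Tuple[str, ...]) -> bool:
    """True if instance x satisfies every constraint in h."""
    return all(c == "?" or c == v for c, v in zip(h, x))


examples = [
    (("Sunny", "Warm", "Normal", "Strong", "Warm", "Same"), True),
    (("Sunny", "Warm", "High", "Strong", "Warm", "Same"), True),
    (("Rainy", "Cold", "High", "Strong", "Warm", "Change"), False),
    (("Sunny", "Warm", "High", "Strong", "Cool", "Change"), True),
]
h = find_s(examples)
print(h)  # ['Sunny', 'Warm', '?', 'Strong', '?', '?']
```

The target here can be expressed as such a conjunction, and the data are noise-free. As a result, the hypothesis FIND-S returns also rejects the negative example, even though the algorithm never looked at it.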


We will say that a learning algorithm is a consistent learner provided it outputs a hypothesis that commits zero errors over the training examples.

Bayes theorem provides a direct method of calculating the posterior probability of each hypothesis h in H: $P(h \mid D) = \frac{P(D \mid h)\,P(h)}{P(D)}$. Every consistent learner outputs a MAP hypothesis if we assume a uniform prior probability distribution over H (that is, $P(h_i) = P(h_j)$ for all i, j) and deterministic, noise-free training data.

The output hypothesis h should have a low error rate on new examples drawn from the same distribution $\mathcal{D}$ as the training examples. Machine learning is seen as a part of artificial intelligence. Machine learning algorithms build a model based on sample data, known as training data, in order to make predictions or decisions without being explicitly programmed to do so.
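The requirement that h have a low error rate on new examples from $\mathcal{D}$ refers to the true error. This is the probability that h disagrees with the target f on an instance drawn from $\mathcal{D}$. The sketch below estimates it by sampling, assuming we can draw instances from $\mathcal{D}$ and evaluate f. The helper name, the two threshold functions, and the uniform distribution are hypothetical choices for illustration.

```python
import random
from typing import Callable


def estimate_true_error(
    h: Callable[[float], bool],
    f: Callable[[float], bool],
    draw: Callable[[random.Random], float],
    n_samples: int = 100_000,
    seed: int = 0,
) -> float:
    """Monte Carlo estimate of Pr_{x ~ D}[h(x) != f(x)], where draw() samples from D."""
    rng = random.Random(seed)
    mistakes = 0
    for _ in range(n_samples):
        x = draw(rng)
        if h(x) != f(x):
            mistakes += 1
    return mistakes / n_samples


def target(x: float) -> bool:
    return x >= 0.5


def hypothesis(x: float) -> bool:
    return x >= 0.6


# D is uniform on [0, 1); h and f disagree exactly on [0.5, 0.6), so the true error is 0.1.
err = estimate_true_error(hypothesis, target, lambda rng: rng.random())
print(err)
```

A hypothesis can have zero training error and still a nonzero true error like this one. That gap is why generalization has to be argued separately from consistency.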

Repeat until $(\mathbf{w} \cdot \mathbf{a}_i)\, l_i > 0$ for all $i$. We have seen that a consistent learner can be used to design a PAC-learning algorithm, provided the output hypothesis comes from a class that is not too large. In particular, it suffices that the logarithm of the size of the hypothesis class be polynomial in the required factors.
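For a finite hypothesis class, the standard bound for consistent learners makes the "log of the size of the hypothesis class" condition concrete. With probability at least $1 - \delta$, every hypothesis consistent with $m \ge \frac{1}{\epsilon}\left(\ln|H| + \ln\frac{1}{\delta}\right)$ independently drawn examples has true error at most $\epsilon$. Below is a small sketch of that calculation. The helper name is hypothetical, and the example class (conjunctions of literals over n boolean variables, $|H| = 3^n$) is an illustrative assumption.

```python
import math


def consistent_learner_sample_size(h_size: int, epsilon: float, delta: float) -> int:
    """Smallest integer m with m >= (1/epsilon) * (ln|H| + ln(1/delta))."""
    if h_size < 1:
        raise ValueError("hypothesis class must be non-empty")
    if not (0 < epsilon < 1 and 0 < delta < 1):
        raise ValueError("epsilon and delta must lie in (0, 1)")
    return math.ceil((math.log(h_size) + math.log(1 / delta)) / epsilon)


# Conjunctions of literals over n = 10 boolean variables: |H| = 3**10.
m = consistent_learner_sample_size(3 ** 10, epsilon=0.1, delta=0.05)
print(m)  # 140
```

Because $\ln 3^n = n \ln 3$ grows only linearly in n, the required sample size does too. This is the sense in which such a class is "not too large".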

The perceptron learning algorithm is simple and elegant.

We say that a classification function c is F-L-learnable. The best previously known algorithm for computing descriptive one-variable patterns requires time $O(n^4)$.

A consistent vector learning algorithm is one in which each of the d-dimensional learning algorithms is consistent. So far, we have not imposed any requirement on the hypothesis class H.

This notion of teaching is related to compression. The learning algorithm is given a set of m examples and outputs a hypothesis $h \in H$ that is consistent with those examples, i.e., correctly classifies all of them.
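As a concrete case, here is a minimal consistent learner for a hypothetical class of one-dimensional thresholds, $h_t(x) = 1$ iff $x \ge t$. The class and the function name are illustrative assumptions. The point is only that the learner either returns a hypothesis with zero training errors or reports that none exists in the class.

```python
from typing import Optional, Sequence, Tuple


def learn_threshold(examples: Sequence[Tuple[float, int]]) -> Optional[float]:
    """Return t such that h_t(x) = [x >= t] classifies every example correctly, or None."""
    positives = [x for x, y in examples if y == 1]
    negatives = [x for x, y in examples if y == 0]
    if positives:
        t = min(positives)  # largest threshold that still accepts every positive
    elif negatives:
        t = max(negatives) + 1.0  # any value above all negatives
    else:
        t = 0.0  # no data: every threshold is consistent
    # Consistency check: h_t must commit zero errors on the training examples.
    if all((x >= t) == (y == 1) for x, y in examples):
        return t
    return None


print(learn_threshold([(1.0, 0), (2.0, 0), (3.5, 1), (5.0, 1)]))  # 3.5
print(learn_threshold([(1.0, 1), (2.0, 0)]))  # None
```

Choosing $t = \min(\text{positives})$ rejects as many instances as possible while still accepting all positives. So if any consistent threshold exists, this one is consistent too.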

A simple learner (human or machine) might have difficulty remembering a large set of examples and finding a hypothesis. Finally, a feature-vector learning algorithm L takes a feature set F, a training set T, and a vector learning algorithm, and returns a classification function in C. A consistent learner, which outputs a hypothesis that perfectly fits the training data, follows quite a reasonable learning strategy: every consistent learner outputs a hypothesis belonging to the version space. We want to know how well such a hypothesis generalizes.

We say that L is an efficient consistent learner if the running time of L is polynomial in n, size(c), and m. The learning algorithm is intermittently provided with labeled examples and has access to a knowledgeable teacher capable of answering membership queries.

First, we design a consistent and set-driven learner that, using the concept of descriptive patterns, achieves update time $O(n^2 \log n)$, where n is the size of the input sample.

We wish to find a solution $\mathbf{w}$ to $(\mathbf{w} \cdot \mathbf{a}_i)\, l_i > 0$ for all $i$. An optimal teacher is then one who provides the learner with the smallest possible teaching set.
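The idea of a smallest teaching set can be made concrete by brute force on a tiny example. This is a minimal sketch that assumes a finite instance space and represents each hypothesis as a vector of labels. Here a teaching set is a set of instances whose target labels leave the target as the only consistent hypothesis. The threshold class and the name `min_teaching_set` are illustrative assumptions. The search is exponential, so it only suits toy classes.

```python
from itertools import combinations
from typing import Sequence, Tuple

Labels = Tuple[int, ...]  # a hypothesis, given by its label on each instance 0..n-1


def min_teaching_set(target: Labels, H: Sequence[Labels]) -> Tuple[int, ...]:
    """Smallest set of instances whose target labels rule out every other hypothesis."""
    if target not in H:
        raise ValueError("target must belong to H")
    n = len(target)
    for size in range(n + 1):  # try smaller teaching sets first
        for S in combinations(range(n), size):
            survivors = [h for h in H if all(h[i] == target[i] for i in S)]
            if all(h == target for h in survivors):
                return S
    raise AssertionError("unreachable: labeling every instance identifies the target")


# Thresholds on X = {0, ..., 4}: h_t(x) = 1 iff x >= t, for t = 0, ..., 5.
H = [tuple(int(x >= t) for x in range(5)) for t in range(6)]
print(min_teaching_set(H[2], H))  # (1, 2)
```

For an interior threshold, the teacher needs only the two points on either side of the boundary. That is far fewer than labeling all of X.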

A counterexample is a string in the symmetric difference of the correct set and the conjectured set. A learning algorithm L is described that correctly learns any regular set from any minimally adequate Teacher in time polynomial in the number of states of the minimum DFA for the set and the maximum length of any counterexample provided by the Teacher. The learner constructs an initial hypothesis from the given set of labeled examples and the teacher's responses to membership queries.

Bayes theorem can be used to design a straightforward learning algorithm, the Brute-Force MAP Learning algorithm:

1. For each hypothesis h in H, calculate the posterior probability $P(h \mid D)$.
2. Output the hypothesis $h_{MAP}$ with the highest posterior probability.
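The Brute-Force MAP Learning algorithm can be sketched directly for a small finite H. This is a minimal sketch that assumes deterministic, noise-free data, so $P(D \mid h)$ is 1 when h is consistent with D and 0 otherwise. The threshold hypotheses, the prior dictionary, and the function name are illustrative. Exact fractions are used so the posteriors can be compared without rounding.

```python
from fractions import Fraction
from typing import Callable, Dict, Sequence, Tuple

Hypothesis = Callable[[int], int]


def brute_force_map(
    H: Dict[str, Hypothesis],
    prior: Dict[str, Fraction],
    D: Sequence[Tuple[int, int]],
) -> Tuple[str, Dict[str, Fraction]]:
    """Compute P(h|D) for every h in H and return the MAP hypothesis and all posteriors."""

    def likelihood(h: Hypothesis) -> Fraction:
        # Noise-free data: P(D|h) = 1 if h labels every example correctly, else 0.
        return Fraction(1) if all(h(x) == y for x, y in D) else Fraction(0)

    joint = {name: likelihood(h) * prior[name] for name, h in H.items()}
    evidence = sum(joint.values(), Fraction(0))  # P(D) = sum over h of P(D|h) P(h)
    if evidence == 0:
        raise ValueError("no hypothesis with nonzero prior is consistent with D")
    posterior = {name: p / evidence for name, p in joint.items()}
    h_map = max(posterior, key=lambda name: posterior[name])  # first of any ties
    return h_map, posterior


# Thresholds h_t(x) = 1 iff x >= t on X = {0, ..., 4}, with a uniform prior.
H = {f"t={t}": (lambda x, t=t: int(x >= t)) for t in range(6)}
prior = {name: Fraction(1, len(H)) for name in H}
h_map, posterior = brute_force_map(H, prior, [(1, 0), (4, 1)])
print(h_map, posterior["t=3"], posterior["t=0"])  # t=2 1/3 0
```

Under a uniform prior, every consistent hypothesis receives the same posterior, $1/|VS_{H,D}|$, and every inconsistent one receives 0. So whichever consistent hypothesis a learner outputs is a MAP hypothesis.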


Machine Learning, Chapter 6 (CSE 574, Spring 2003), Section 6.3.2: MAP Hypotheses and Consistent Learners. The perceptron algorithm seeks a solution $\mathbf{w}$ to $(\mathbf{w} \cdot \mathbf{a}_i)\, l_i > 0$ for $1 \le i \le n$. Starting with $\mathbf{w} = l_1 \mathbf{a}_1$, pick any example $\mathbf{a}_i$ with $(\mathbf{w} \cdot \mathbf{a}_i)\, l_i \le 0$ and replace $\mathbf{w}$ by $\mathbf{w} + l_i \mathbf{a}_i$.
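The perceptron update rule can be sketched in plain Python. This is a minimal sketch that assumes labels $l_i \in \{-1, +1\}$ and a separator through the origin, as in the formulation above. A hypothetical `max_updates` cap lets the loop also terminate on non-separable data. Each round picks the first misclassified example, which the rule permits since any such example may be chosen.

```python
from typing import List, Sequence


def dot(u: Sequence[float], v: Sequence[float]) -> float:
    return sum(a * b for a, b in zip(u, v))


def perceptron(
    points: Sequence[Sequence[float]],
    labels: Sequence[int],
    max_updates: int = 10_000,
) -> List[float]:
    """Find w with (w . a_i) * l_i > 0 for every i, using the perceptron update."""
    w = [labels[0] * a for a in points[0]]  # start with w = l_1 a_1
    for _ in range(max_updates):
        bad = [i for i, (a, l) in enumerate(zip(points, labels)) if dot(w, a) * l <= 0]
        if not bad:
            return w  # every example is strictly on the correct side
        i = bad[0]  # any violated example may be used
        w = [wj + labels[i] * aj for wj, aj in zip(w, points[i])]  # w <- w + l_i a_i
    raise RuntimeError("no separating w found within max_updates")


points = [(1.0, 1.0), (2.0, -1.0), (-1.0, -1.0), (-1.0, 2.0)]
labels = [1, 1, -1, -1]
print(perceptron(points, labels))  # [2.0, -1.0]
```

To learn a separator that does not pass through the origin, a common trick is to append a constant coordinate 1 to every $\mathbf{a}_i$.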

$\mathrm{error}(h, f) = \Pr_{x \sim \mathcal{D}}[f(x) \neq h(x)]$. Version space theorem: the version space consists of the hypotheses contained in S, plus those contained in G, plus those in between.
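The version space theorem can be checked by brute force on a small example. This is a minimal sketch that represents each hypothesis by its extension, the set of instances it labels positive, so "more general than or equal to" is the superset relation. The interval hypothesis class on {0, ..., 5} and the helper names are illustrative. The code first lists the version space by List-Then-Eliminate. It then extracts the maximally specific boundary S and the maximally general boundary G. Finally, it confirms that the hypotheses lying between some member of S and some member of G are exactly the version space.

```python
from typing import FrozenSet, List, Sequence, Tuple

Hyp = FrozenSet[int]  # a hypothesis, given by the set of instances it labels positive


def version_space(H: Sequence[Hyp], D: Sequence[Tuple[int, int]]) -> List[Hyp]:
    """List-Then-Eliminate: keep every hypothesis consistent with all examples."""
    return [h for h in H if all((x in h) == (y == 1) for x, y in D)]


def boundaries(vs: Sequence[Hyp]) -> Tuple[List[Hyp], List[Hyp]]:
    """S: members with no strictly more specific member; G: none strictly more general."""
    S = [h for h in vs if not any(other < h for other in vs)]
    G = [h for h in vs if not any(other > h for other in vs)]
    return S, G


def between(H: Sequence[Hyp], S: Sequence[Hyp], G: Sequence[Hyp]) -> List[Hyp]:
    """Hypotheses h in H with s <= h <= g for some s in S and some g in G."""
    return [h for h in H if any(s <= h for s in S) and any(h <= g for g in G)]


# Intervals [a, b] on X = {0, ..., 5}, plus the empty hypothesis.
X = range(6)
H = [frozenset(range(a, b + 1)) for a in X for b in X if a <= b] + [frozenset()]
D = [(2, 1), (3, 1), (0, 0), (5, 0)]

vs = version_space(H, D)
S, G = boundaries(vs)
print(sorted(map(sorted, S)), sorted(map(sorted, G)))  # [[2, 3]] [[1, 2, 3, 4]]
print(set(between(H, S, G)) == set(vs))  # True
```

Storing only S and G is enough to recover all four consistent intervals here. This is what makes boundary-set representations of the version space practical.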

According to Tom Mitchell (Machine Learning, 1997), Find-S outputs the maximally specific consistent hypothesis.

Let X be an arbitrary set of instances and let H be a set of boolean-valued hypotheses defined over X. Let $c: X \to \{0, 1\}$ be an arbitrary target concept defined over X, and let D be an arbitrary set of training examples $\{\langle x, c(x) \rangle\}$. A consistent learning algorithm is simply required to output a hypothesis that is consistent with all the training data provided to it.

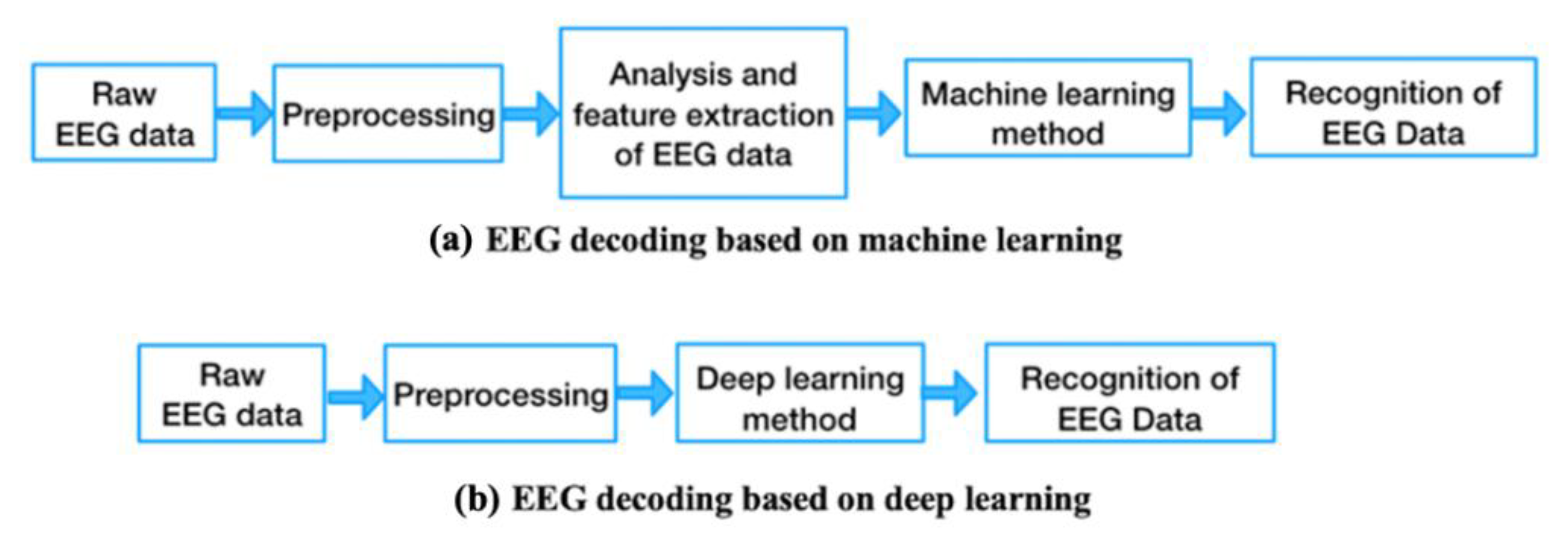

Brain Sciences Free Full Text Tsmg A Deep Learning Framework For Recognizing Human Learning Style Using Eeg Signals Html

What Is Machine Learning Inference Hazelcast

Ekg Examples Electrocardiography Cardiovascular System Ekg Cardiovascular System P Wave

Number Of The Day Worksheet Fifth Grade Math Math 5th Grade Math

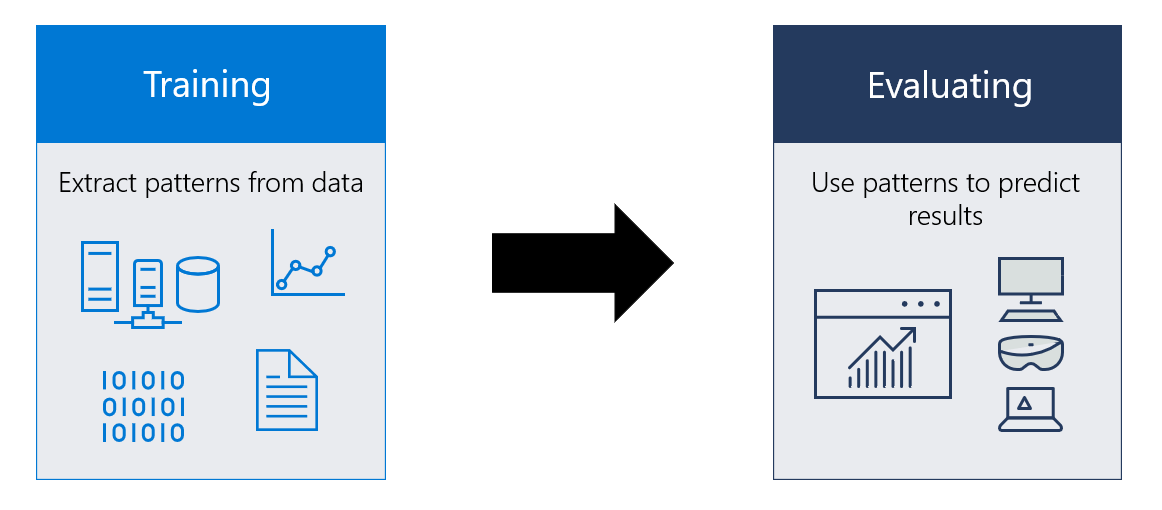

What Is A Machine Learning Model Microsoft Docs

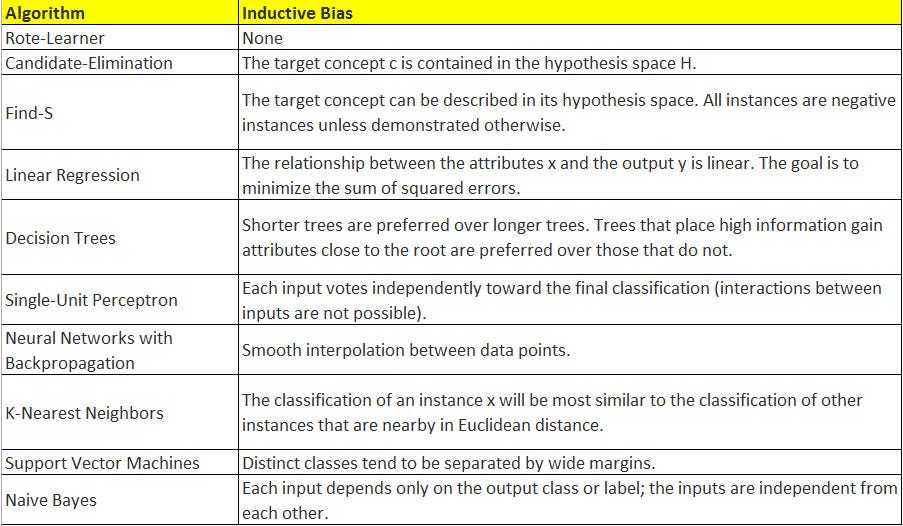

Chapter 2 Inductive Bias Part 3 By Pralhad Teggi Medium

21 Cfr Part 11 Certification Training In 2021 Training Certificate 21 Cfr Part 11 Life Science

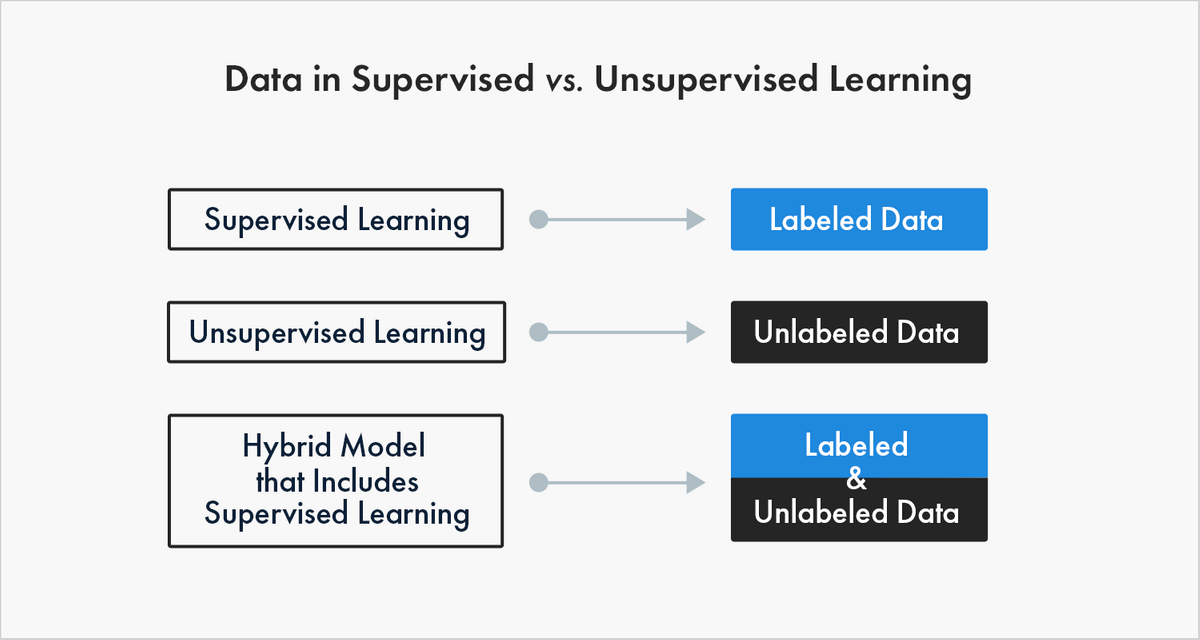

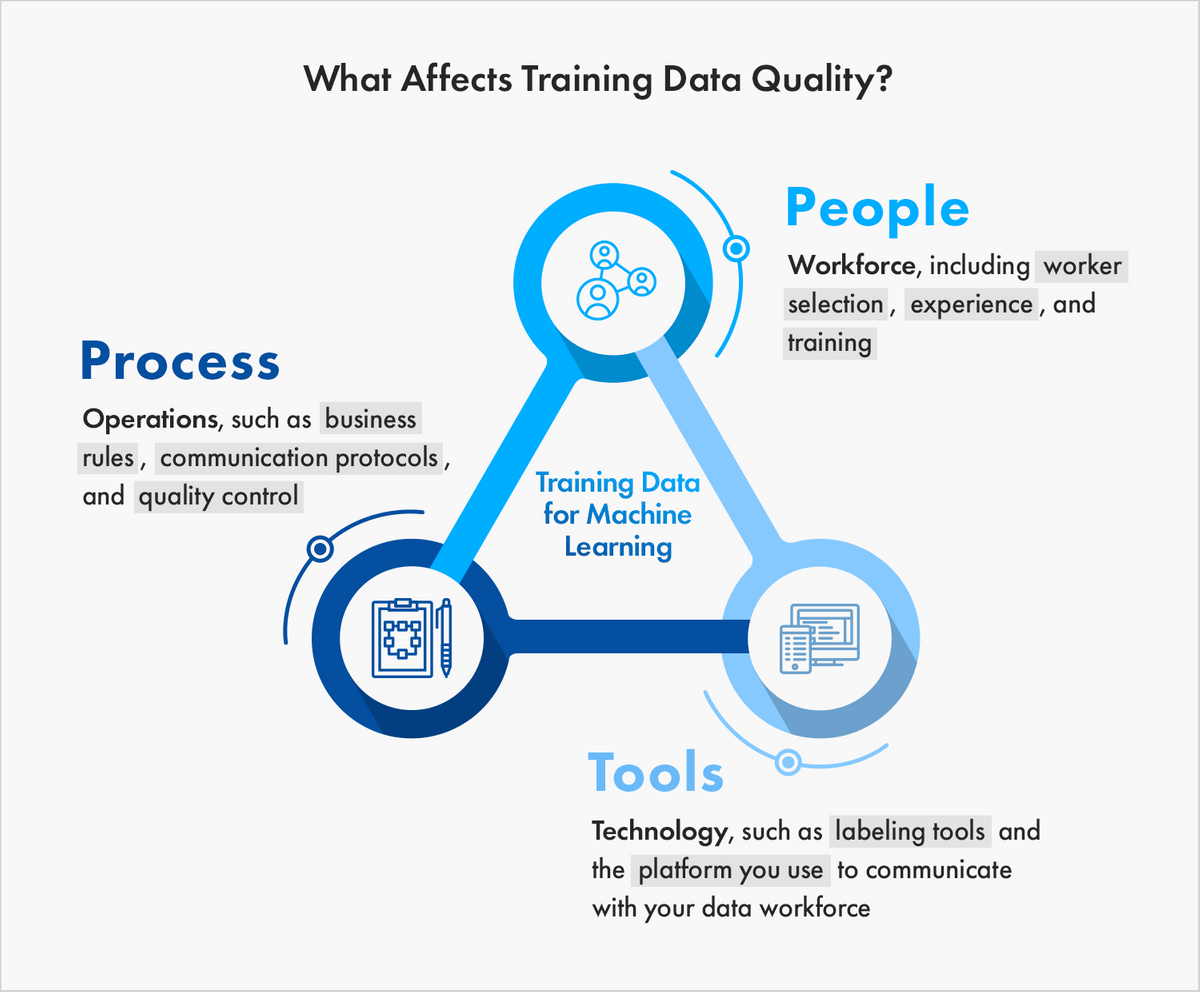

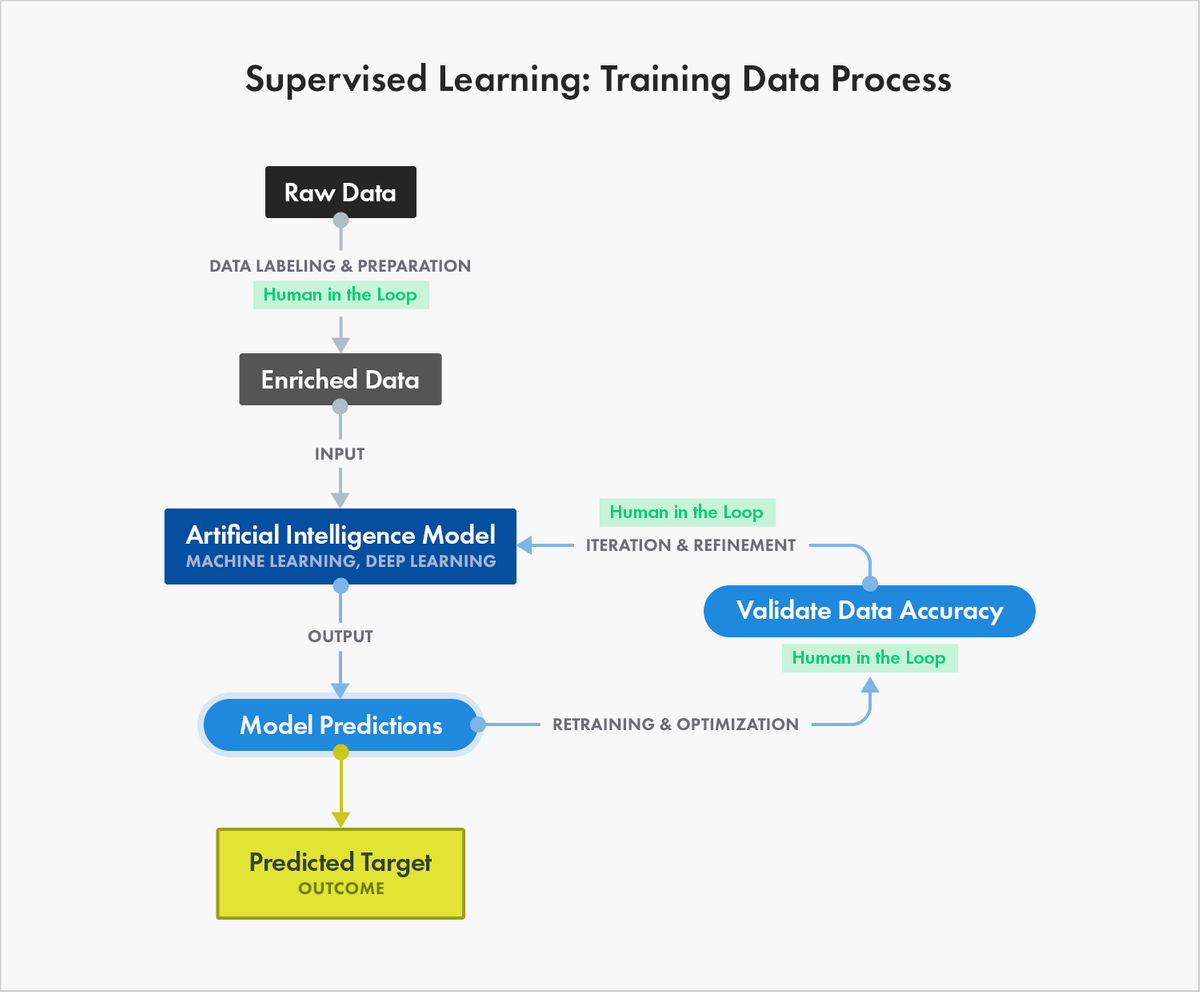

The Essential Guide To Quality Training Data For Machine Learning

Chapter 2 Inductive Bias Part 3 By Pralhad Teggi Medium

Number Of The Day Google Slides And Worksheets For 3rd Grade 3rd Grade Math Daily Math Third Grade Math

The Essential Guide To Quality Training Data For Machine Learning

General Machine Learning An Overview Sciencedirect Topics

Ensemble Classifier Machine Learning Deep Learning Machine Learning Data Science

Full Article Using Supervised Machine Learning On Large Scale Online Forums To Classify Course Related Facebook Messages In Predicting Learning Achievement Within The Personal Learning Environment

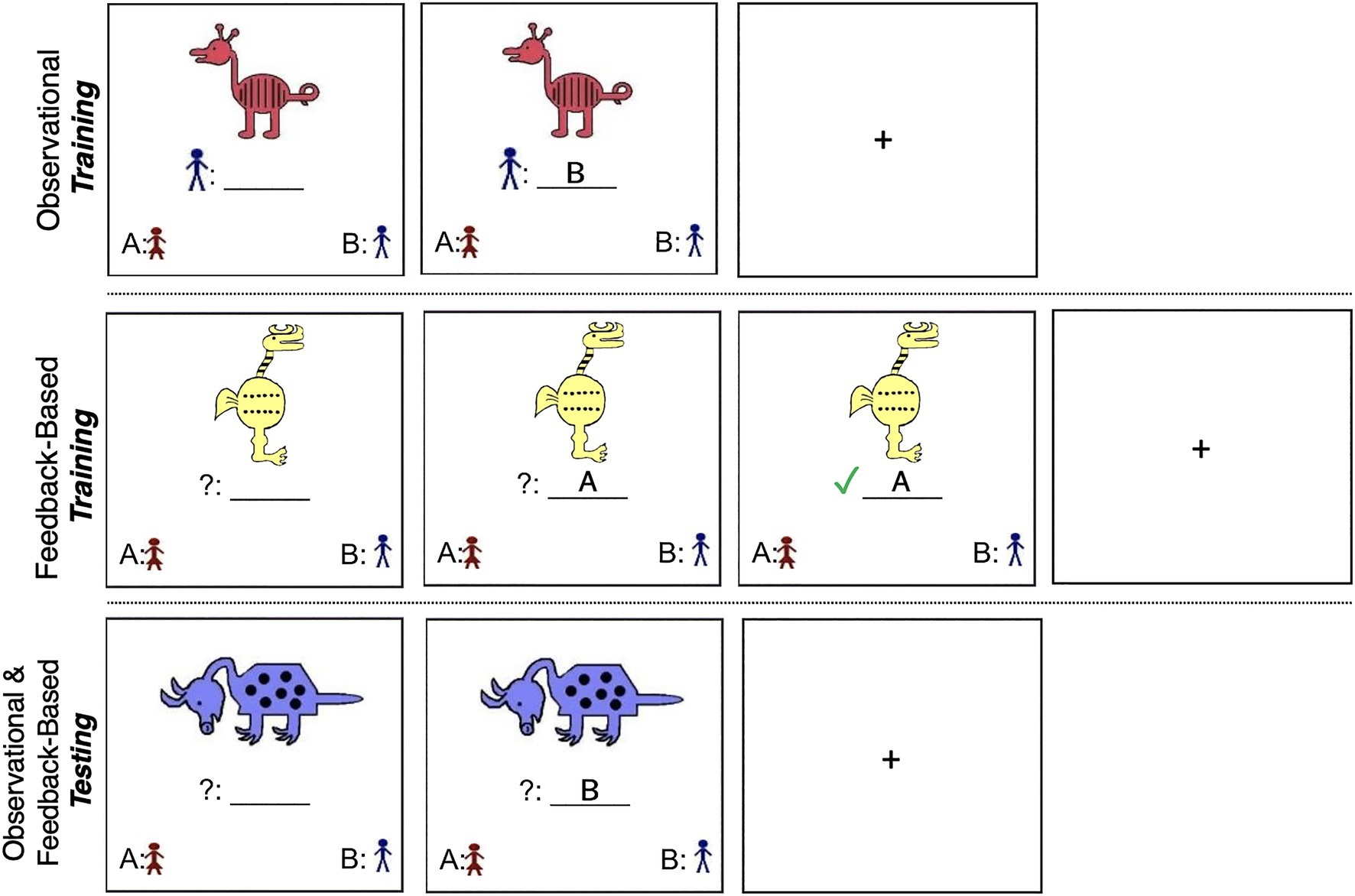

Frontiers Strategy Development And Feedback Processing During Complex Category Learning Psychology

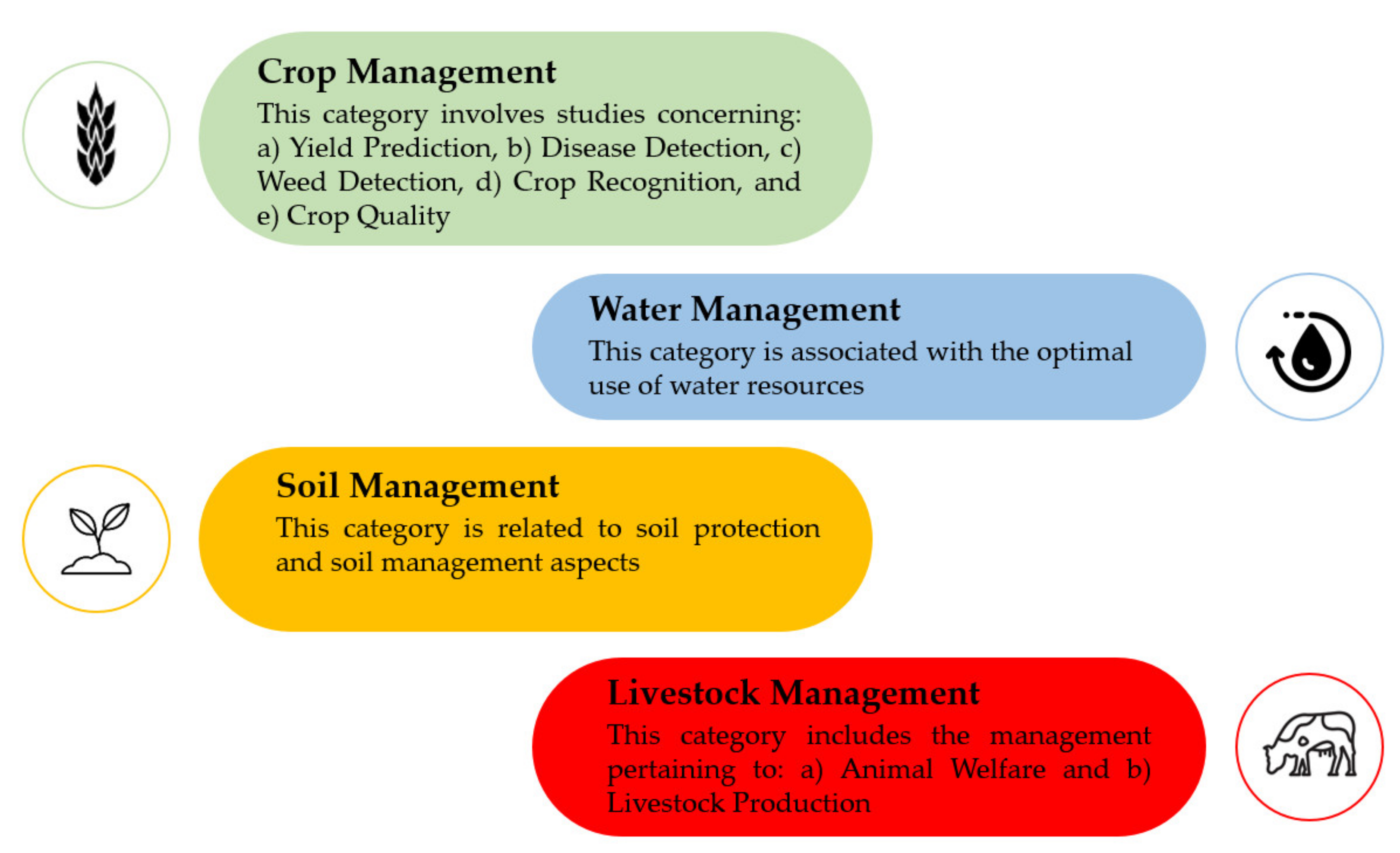

Sensors Free Full Text Machine Learning In Agriculture A Comprehensive Updated Review Html

The Essential Guide To Quality Training Data For Machine Learning

Komentar

Posting Komentar